Credal: Control how AI uses your data

Credal protects your organization's sensitive data from AI tools that could surface it externally. Think Okta for AI.

Hi there! Ravin and Jack here from Credal.ai.

TL;DR:

Credal protects your sensitive data across all AI apps. We give everyone at your company access to the latest AI models via our secure chat UI, integrated with your data, whilst management gets granular control over what data is shared with each provider.

⚔️ The Problem:

CEOs of every company, from Microsoft to our YC W23 batchmates, understand the massive productivity gains available through AI applications. But adopting these tools in the enterprise raises huge concerns: what happens to our data once we hand it over to AI providers? The landscape of providers is already large and growing fast, and many have terms of service that permit training general models on your data. As usage of these tools explodes, businesses risk losing control over what data has been shared with whom, when, and what technical and contractual safeguards are in place to keep that data from inadvertently ending up in the hands of competitors via an AI model’s training dataset.

⚡ Solution:

Credal is the gatekeeper for your data, ensuring that all data sent to AI is protected by three layers of security:

- Credal identifies sensitive data using our (self-hosted) detection models alongside your existing data classifications, and lets you automatically redact, anonymize, or block it, or simply warn the user, when sensitive data is about to be sent to a provider.

- For major endpoints like chat and text completion, Credal lets you switch seamlessly between providers, so you can take advantage of the latest or most privacy-preserving models as they are released without locking your company into a single vendor.

- Finally, Credal protects you with standardized Terms of Service and MSAs that ensure you keep total control over your data. Credal links your audit logs to the specific provider behind each request, giving full transparency into both the technical and contractual safeguards that govern how any piece of data could be used.
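The post doesn't describe how these layers are implemented. Purely as an illustration of the general idea (not Credal's code or API), here is a minimal Python sketch of a policy-driven gate that detects sensitive data, applies a redact/anonymize/block/warn action per category, and records an audit entry tied to the provider. It assumes toy regexes stand in for real detection models, and every name in it (`gate`, `DETECTORS`, `POLICY`, the `provider-a` label, the `sk-` key format) is hypothetical.

```python
import hashlib
import re
from dataclasses import dataclass, field
from enum import Enum


class Action(Enum):
    REDACT = "redact"
    ANONYMIZE = "anonymize"
    BLOCK = "block"
    WARN = "warn"


# Hypothetical detectors: toy regexes standing in for real detection models
# and existing data classifications.
DETECTORS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "api_key": re.compile(r"\bsk-[A-Za-z0-9]{20,}\b"),  # hypothetical key format
    "phone": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Hypothetical admin policy: the action to take for each data category.
# Categories without an entry fall back to WARN.
POLICY = {
    "email": Action.ANONYMIZE,
    "api_key": Action.REDACT,
    "us_ssn": Action.BLOCK,
}


@dataclass
class GateResult:
    allowed: bool                                # False if the request was blocked
    text: str                                    # the prompt as it would be sent
    warnings: list = field(default_factory=list)
    audit: dict = field(default_factory=dict)    # audit record tied to the provider


def _pseudonym(category: str, value: str) -> str:
    # Stable placeholder: the same value always maps to the same token.
    digest = hashlib.sha256(value.encode()).hexdigest()[:8]
    return f"<{category}_{digest}>"


def gate(prompt: str, provider: str) -> GateResult:
    """Apply the (hypothetical) policy to a prompt before it goes to `provider`."""
    text, findings, warnings = prompt, [], []
    for category, pattern in DETECTORS.items():
        matches = pattern.findall(text)
        if not matches:
            continue
        action = POLICY.get(category, Action.WARN)
        findings.append({"category": category, "count": len(matches), "action": action.value})
        if action is Action.BLOCK:
            # Nothing is sent; the audit record still notes the attempt.
            audit = {"provider": provider, "findings": findings, "sent": False}
            return GateResult(False, "", warnings, audit)
        if action is Action.REDACT:
            text = pattern.sub(f"[REDACTED {category}]", text)
        elif action is Action.ANONYMIZE:
            text = pattern.sub(lambda m: _pseudonym(category, m.group(0)), text)
        else:  # Action.WARN: send unchanged, but surface a warning to the user
            warnings.append(f"{len(matches)} {category} value(s) will be sent to {provider}")
    audit = {"provider": provider, "findings": findings, "sent": True}
    return GateResult(True, text, warnings, audit)


if __name__ == "__main__":
    result = gate("Email jane.doe@example.com, cell 555-123-4567", provider="provider-a")
    print(result.text)
    print(result.warnings)
    print(result.audit)
```

In this sketch, blocking short-circuits before anything would be sent, while anonymization uses a stable hash so the same value maps to the same placeholder across a conversation. If responses ever needed to be re-identified, a lookup table from placeholder to original value would be needed instead of a one-way hash.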

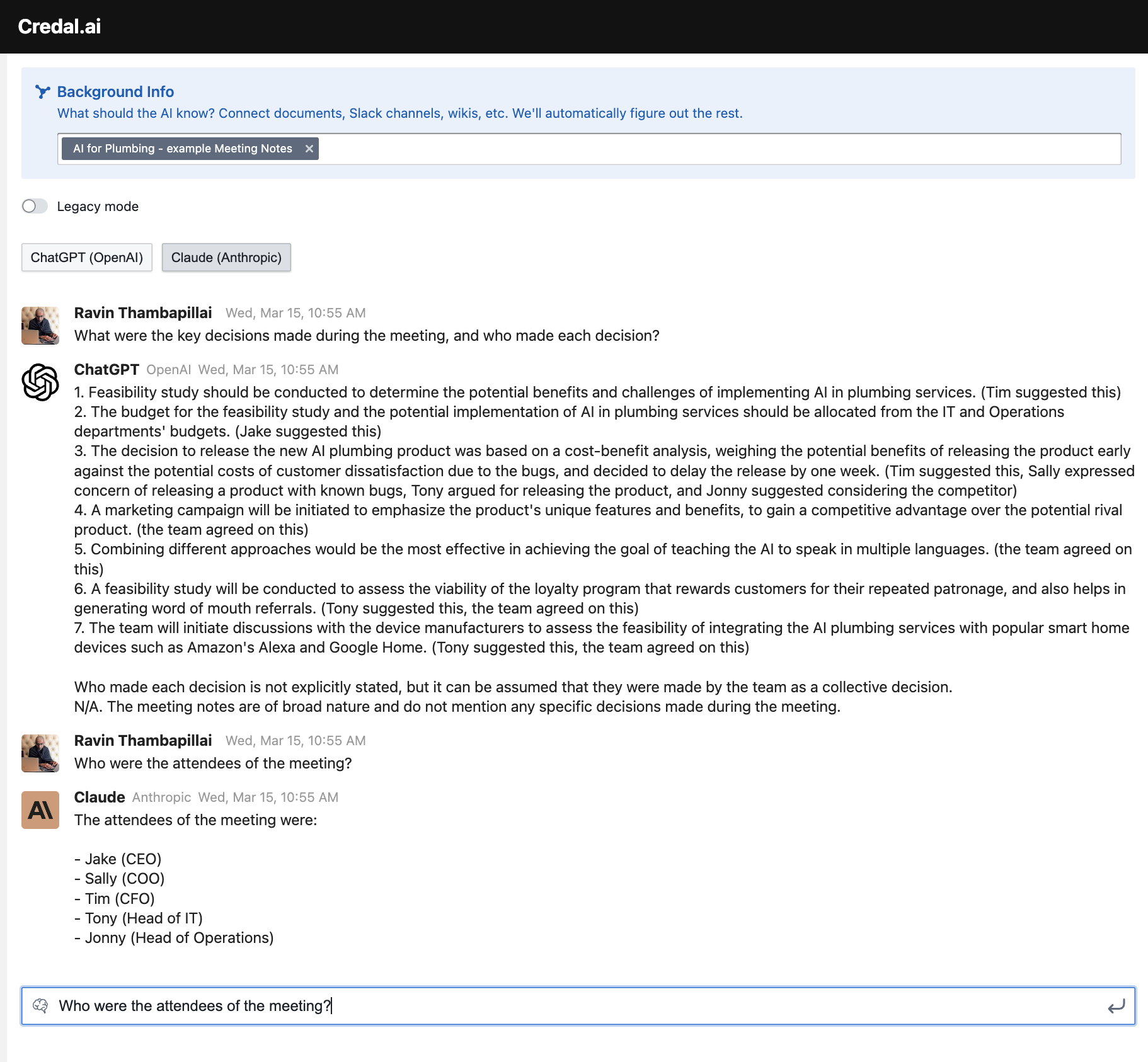

Our secure chat UI can automatically route your request to the best AI model for your question (or you can choose one manually).

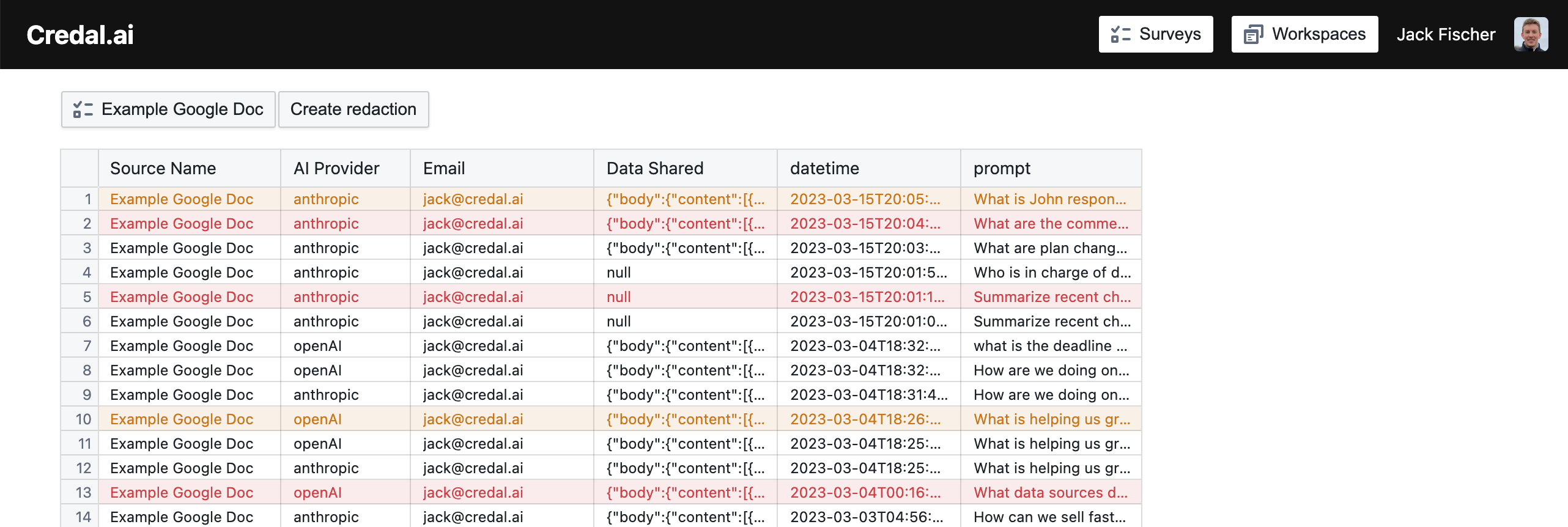

Every request gets a granular audit log, per data source, including what was redacted.

Who needs this, and why did we build it?

Our current customers are security-conscious companies trying to use AI to gain an edge in their operations and products. Early on, while building AI applications, we noticed that customers were really nervous about handing data over to AI models they didn’t fully understand. Many enterprises were turning ChatGPT off entirely, or banning its use on anything remotely sensitive. Today, our customers use Credal for a range of problems, from basics like summarizing meeting notes with ChatGPT to using complex combinations of models (like Claude + GPT-4) to automatically structure company documents. Either way, Credal gives our customers the confidence they need to use cutting-edge AI on sensitive data.

Meet the Team:

Jack and I met in 2019, when we co-led Palantir’s engagement with a multibillion-dollar life sciences conglomerate, taking it from an initial pilot to an enterprise deal so critical to their business that Palantir going bankrupt was listed as a risk in the customer’s S-1 filing.

I started my career at Google, and have since been both in the trenches and leading teams at THG (at Series A), GoCardless (at Series A), and Palantir. Jack started his career at H1 (at pre-seed) before joining Palantir. We’ve both led highly acclaimed teams at Palantir: The Atlantic called the output of the work I led for 18 months “America’s most reliable pandemic data”[1], and the Washington Post said of a team Jack led for a year: “With these systems aiding brave Ukrainian troops, the Russians probably cannot win”[2].

We’re bringing a wealth of expertise in handling the most sensitive data imaginable to what we see as one of the central problems of our time: building trust in AI.

Interested?

If you’re an enterprise with sensitive data and you want to use that data with AI, grab time to chat with us. Enterprises that sign up before Demo Day (April 5th) are eligible for 50% off!

Footnotes:

[1] https://www.theatlantic.com/health/archive/2021/01/hhs-hospitalization-pandemic-data/617725/ (The article discusses whether the Biden administration would keep paying for the ‘vital system’ that HHS had procured under Trump. In case you’re wondering what happened: it did, and even expanded the contract.)

[2] https://www.washingtonpost.com/opinions/2022/12/19/palantir-algorithm-data-ukraine-war/