Athina AI: The Ultimate Monitoring and Evaluation Platform for LLM Developers

A suite of tools to supercharge LLM development and help you ship high-performing, reliable AI applications

TL;DR: Athina helps you monitor and evaluate your LLM-powered app. Plug-and-play evals in production. 5-minute setup.

---

👋 Hey everyone! We’re thrilled to announce the launch of Athina AI, a suite of tools for LLM developers to develop and ship AI products with confidence.

What is Athina AI?

Athina AI is a Monitoring & Evaluation platform for LLM developers.

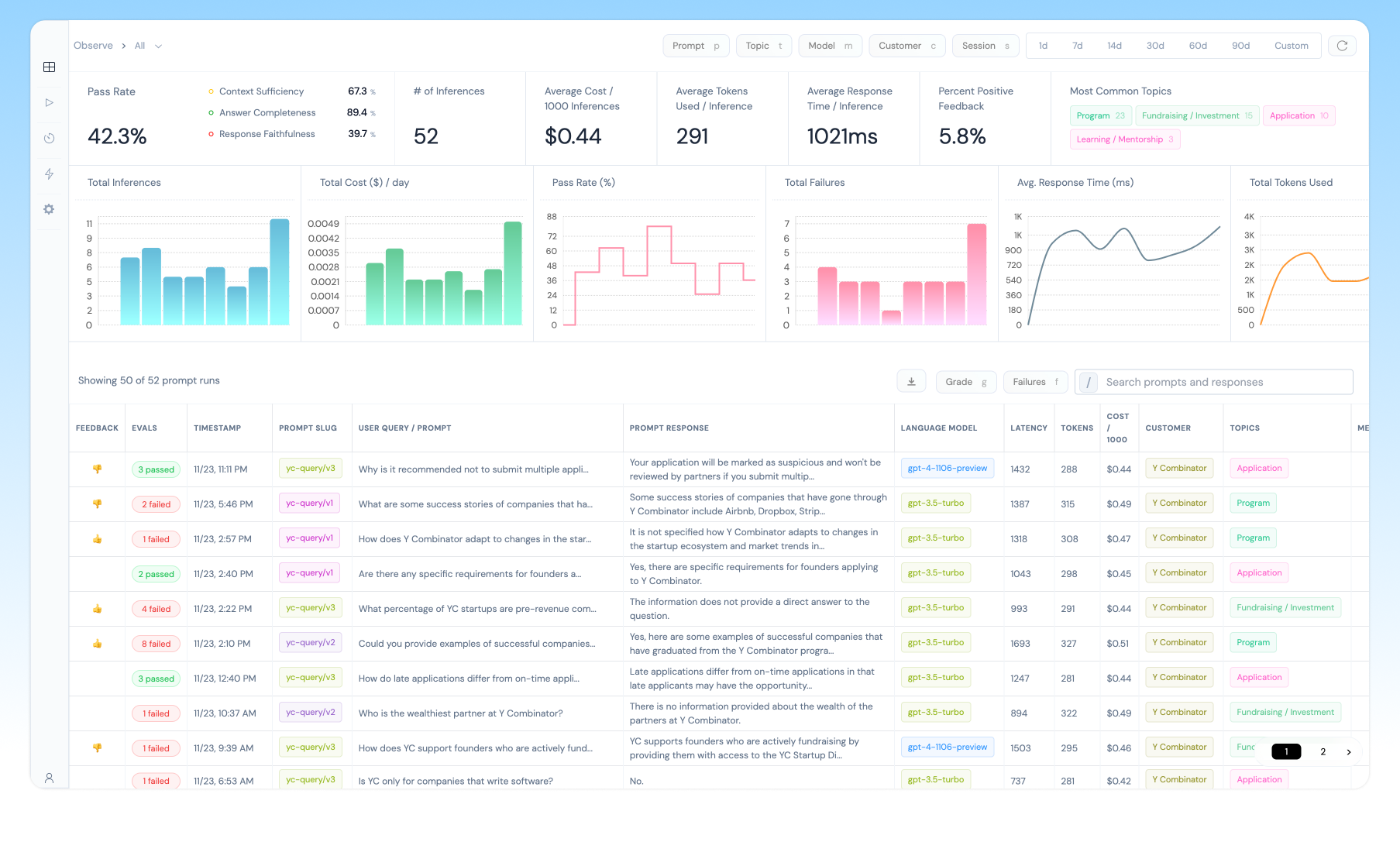

Developers use Athina’s evaluation framework and production monitoring platform to improve the performance and reliability of AI applications through real-time monitoring, analytics, and automatic evaluations.

🔴 The Problem

- It is difficult to measure the quality of Generative AI responses.

- Eyeballing responses is tedious and leads to slow development cycles.

- There is no easy way to detect unreliable or bad outputs (especially in production).

- It is difficult to change a prompt or retrieval pipeline with confidence that no regressions are introduced.

- There is poor visibility into the individual steps of your LLM inference pipeline.

LLM developers typically have to build and maintain lots of in-house infrastructure for monitoring and evaluation.

🟢 Our Solution: Athina AI

Athina is a comprehensive suite of tools to supercharge your LLM development lifecycle and help you ship high-performing, reliable AI applications with confidence.

- Quick Setup: Get started in just 5 minutes! The entire integration is a single POST request (and we don’t interfere with your LLM calls).

- Comprehensive Monitoring Platform: Full visibility into your LLM touchpoints. Search, sort, filter, compare, debug.

- Prebuilt Evaluations:

  - You can configure automatic evaluations in just a few clicks: use one of our preset evals or define a custom eval.

  - These evals will run against logged inferences automatically.

  - You can also use our open-source library to run evals and iterate rapidly during development.

- Granular Analytics:

  - Athina tracks usage metrics like response time, cost, token usage, feedback, and more.

  - Athina also tracks metrics from the evals, like Faithfulness, Answer Relevance, Context Sufficiency, etc.

  - You can segment these metrics by any property: customer ID, environment, model, prompt, etc.

  - For example, you could use Athina to see how prompt/v4 is performing for customer ID nike-usa and how gpt-4 performance compares to that of a llama-finetune.
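
To make the segmentation idea concrete, here is a minimal sketch of what "segment a metric by any property" means, written in plain Python. It is not Athina's implementation or API: the record fields (`customer_id`, `prompt_slug`, `model`, `faithfulness`), the scores, and the `segment_mean` helper are all hypothetical and exist only for illustration.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical logged inferences with one eval score each.
# Field names and values are illustrative, not Athina's schema.
records = [
    {"customer_id": "nike-usa", "prompt_slug": "prompt/v4", "model": "gpt-4", "faithfulness": 1.0},
    {"customer_id": "nike-usa", "prompt_slug": "prompt/v4", "model": "gpt-4", "faithfulness": 0.5},
    {"customer_id": "nike-usa", "prompt_slug": "prompt/v4", "model": "llama-finetune", "faithfulness": 0.25},
    {"customer_id": "other-co", "prompt_slug": "prompt/v3", "model": "gpt-4", "faithfulness": 0.0},
]


def segment_mean(rows, metric, by):
    """Average `metric` over rows, grouped by the tuple of properties in `by`."""
    groups = defaultdict(list)
    for row in rows:
        key = tuple(row[prop] for prop in by)
        groups[key].append(row[metric])
    return {key: mean(values) for key, values in groups.items()}


# Compare models overall, then drill into a single customer.
by_model = segment_mean(records, "faithfulness", by=["model"])
by_customer_and_model = segment_mean(records, "faithfulness", by=["customer_id", "model"])
```

With this toy data, `by_model` compares gpt-4 against the llama-finetune across all traffic, while `by_customer_and_model[("nike-usa", "gpt-4")]` narrows the same metric to one customer.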

Who is this for?

Athina is designed for developers building AI products.

If you’re in the prototyping or development stage, you can use Athina to get visibility and rapidly test the LLM-generated responses.

If you have already launched your AI application, you can use Athina to monitor and evaluate your LLM in production.

🌟 Our Story

As a team of engineers and hackers, we spent a summer trying to build various LLM-powered applications for developers.

While working with LLMs, we found that the most challenging part was evaluating the Generative AI output and systematically improving model performance.

After speaking with other AI developers, we discovered a major gap in the tools that engineers need to effectively build production-grade applications using LLMs, and set out to solve this problem.

🚀 Get Started

Here’s how you can get started:

- 🌟 Sign up for a free account at app.athina.ai

- Log your inferences using this guide.

- Try our open source evals.

- Schedule a call with us.
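
For the logging step, the sketch below shows roughly what "one POST request" can look like from your application, using only the Python standard library. Treat every specific here as an assumption: the endpoint URL is a placeholder, and the header and payload field names are hypothetical. The logging guide is the source of truth for the real values.

```python
import json
import urllib.request

# Placeholder endpoint: an assumption for illustration, not Athina's real URL.
LOG_URL = "https://example.invalid/log/inference"


def build_log_request(api_key, prompt, response, model, customer_id=None, environment=None):
    """Build (but do not send) a POST request describing one LLM inference.

    The payload field names and the auth header are hypothetical.
    """
    payload = {"prompt": prompt, "response": response, "model": model}
    # Optional properties you may later want to segment metrics by.
    if customer_id is not None:
        payload["customer_id"] = customer_id
    if environment is not None:
        payload["environment"] = environment
    return urllib.request.Request(
        LOG_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request, timeout=5.0):
    """Send the request and return the HTTP status code."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return resp.status


# Build the request after your own LLM call has returned.
request = build_log_request(
    api_key="YOUR_API_KEY",
    prompt="What is the return policy?",
    response="You can return items within 30 days.",
    model="gpt-4",
    customer_id="nike-usa",
    environment="production",
)
```

Because the request is built after your LLM call returns, logging stays out of the inference path. Calling `send(request)` is left to you once the placeholder values are replaced.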