Call every LLM API like it's OpenAI [100+ LLMs]

Hello, I’m Ishaan - one of the maintainers of LiteLLM.

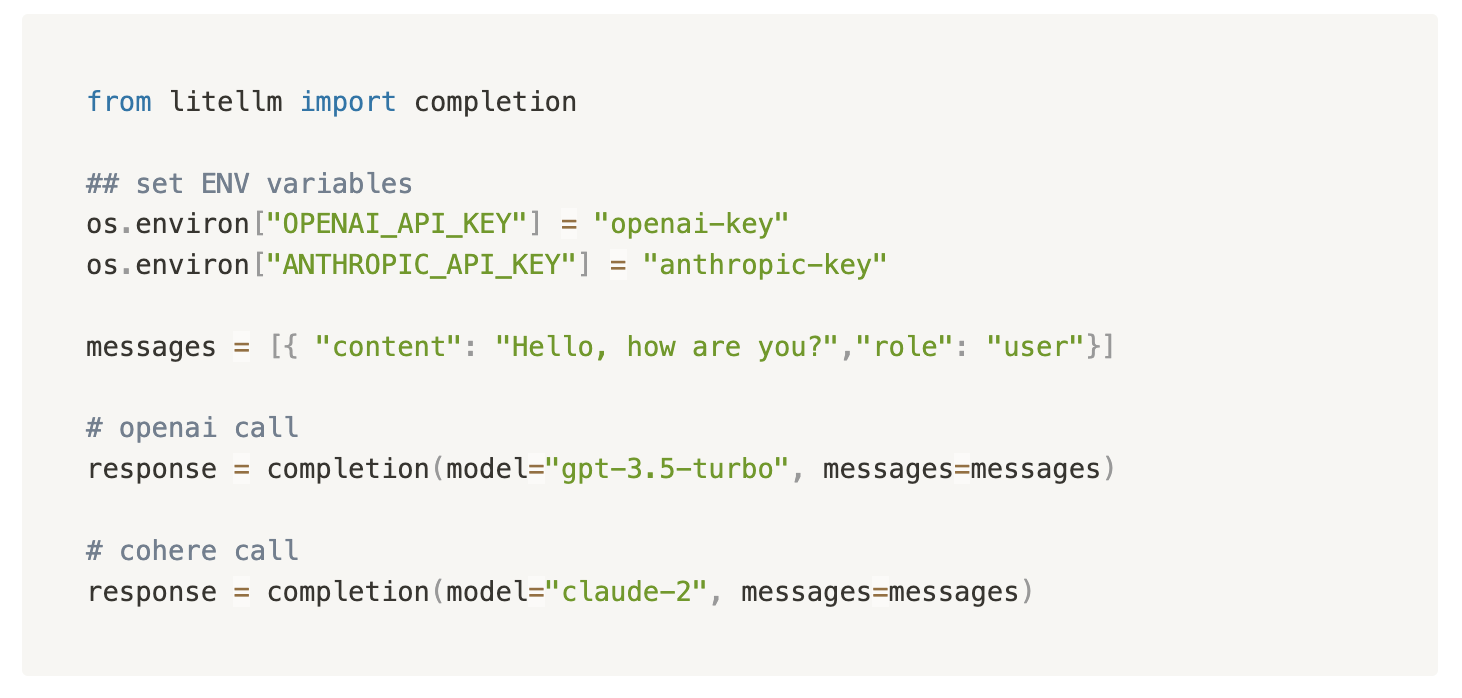

TLDR: LiteLLM lets you call all LLM APIs (Azure, Anthropic, Replicate, etc.) using the OpenAI format. We translate the inputs, standardize exceptions, and guarantee consistent outputs for completion() and embedding() calls.
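To make the translation idea concrete, here is a minimal sketch. It is not LiteLLM's actual implementation. The two provider functions (`fake_chat_provider`, `fake_prompt_provider`) are hypothetical stand-ins that return canned text, and `to_prompt`, `ADAPTERS`, and this local `completion` are illustrative names. The point is only that the caller always passes OpenAI-style `messages` and always reads the reply from `choices[0]["message"]["content"]`.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def to_prompt(messages: List[Message]) -> str:
    """Flatten OpenAI-style chat messages into a single prompt string."""
    return "\n".join(f"{m['role']}: {m['content']}" for m in messages)


# Hypothetical provider stand-ins: each has its own input and output shape.
def fake_chat_provider(messages: List[Message]) -> Dict:
    return {"reply": {"text": f"chat saw {len(messages)} message(s)"}}


def fake_prompt_provider(prompt: str) -> Dict:
    return {"generations": [f"prompt had {len(prompt)} chars"]}


# One small adapter per provider: translate the input, unwrap the output.
def _call_chat(messages: List[Message]) -> str:
    return fake_chat_provider(messages)["reply"]["text"]


def _call_prompt(messages: List[Message]) -> str:
    return fake_prompt_provider(to_prompt(messages))["generations"][0]


ADAPTERS: Dict[str, Callable[[List[Message]], str]] = {
    "chat-model": _call_chat,
    "prompt-model": _call_prompt,
}


def completion(model: str, messages: List[Message]) -> Dict:
    """Call any registered model and return an OpenAI-shaped response."""
    text = ADAPTERS[model](messages)
    return {
        "model": model,
        "choices": [{"message": {"role": "assistant", "content": text}}],
    }


messages = [{"role": "user", "content": "Hello"}]
for name in ADAPTERS:
    # The calling code is identical regardless of the provider behind it.
    print(completion(name, messages)["choices"][0]["message"]["content"])
```

The provider-specific branching lives in one adapter table instead of being scattered through application code as if/else statements.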

Problem ❌: Multiple LLM APIs - Hard to debug

Calling several LLM APIs meant maintaining multiple ~100-line if/else blocks, which made our debugging problems explode.

I remember when we added Azure and Cohere to our chatbot. Azure’s API calls would fail, so we implemented model fallbacks (e.g. if Azure fails, try Cohere, then OpenAI, etc.). However, provider-specific logic meant our code became increasingly complex and hard to debug.
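The fallback pattern described above can be sketched in a few lines. This is an illustration under stated assumptions, not the code we ran in production: `complete_with_fallbacks` is a hypothetical helper, and `flaky_azure`, `working_cohere`, and `working_openai` are stand-ins that simulate provider calls without touching the network.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Message = Dict[str, str]
Provider = Callable[[List[Message]], str]


def complete_with_fallbacks(
    providers: Sequence[Tuple[str, Provider]], messages: List[Message]
) -> Tuple[str, str]:
    """Try each provider in order; return (name, reply) from the first success."""
    errors = []
    for name, call in providers:
        try:
            return name, call(messages)
        except Exception as exc:  # a real system would catch narrower error types
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))


# Hypothetical stand-ins for real provider calls.
def flaky_azure(messages: List[Message]) -> str:
    raise TimeoutError("request timed out")


def working_cohere(messages: List[Message]) -> str:
    return "hello from cohere"


def working_openai(messages: List[Message]) -> str:
    return "hello from openai"


used, reply = complete_with_fallbacks(
    [("azure", flaky_azure), ("cohere", working_cohere), ("openai", working_openai)],
    [{"role": "user", "content": "Hi"}],
)
print(used, reply)
```

The loop itself is simple. The complexity came from every provider needing its own request format, response parsing, and error types inside that loop.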

Solution 💡

1️⃣ Simplify calling existing LLM APIs

That’s when we decided to abstract our LLM calls behind a single package - LiteLLM. We needed I/O that just worked, so we could spend time improving other parts of our system (error-handling/model-fallback logic, etc.).

LiteLLM does 3 things really well:

- Consistent I/O: It removes the need for multiple if/else statements.

- Reliable: Extensively tested with 50+ cases and used in our production environment.

- Observable: Integrations with Sentry, Posthog, Helicone, etc.

2️⃣ Easily add new LLM APIs - LiteLLM UI

The next big challenge was adding new LLM APIs. Each addition involved 3 changes:

- Updating the list of available models users can choose from

- Adding key to our secret manager / .env file

- Mapping the model name - e.g. replicate/llama2-chat-... to a user-facing alias, llama2.
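The three manual changes above can be pictured as one small registry. The sketch below is illustrative only: `MODEL_REGISTRY`, `available_models`, and `resolve_model` are hypothetical names, and the provider model IDs and environment variable names are placeholders, not real identifiers.

```python
import os
from typing import Dict, List, Mapping, Optional

# Each user-facing alias maps to a provider model ID and the env var holding its key.
# All values here are placeholders invented for this example.
MODEL_REGISTRY: Dict[str, Dict[str, str]] = {
    "llama2": {
        "provider_model": "example-provider-a/example-llama2-chat",
        "key_env": "EXAMPLE_PROVIDER_A_API_KEY",
    },
    "command": {
        "provider_model": "example-provider-b/example-command-model",
        "key_env": "EXAMPLE_PROVIDER_B_API_KEY",
    },
}


def available_models() -> List[str]:
    """Change 1: the list of models users can choose from."""
    return sorted(MODEL_REGISTRY)


def resolve_model(alias: str, env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Changes 2 and 3: look up the API key and map the alias to the provider model."""
    env = os.environ if env is None else env
    if alias not in MODEL_REGISTRY:
        raise ValueError(f"unknown model alias: {alias!r}")
    entry = MODEL_REGISTRY[alias]
    api_key = env.get(entry["key_env"])
    if api_key is None:
        raise KeyError(f"missing environment variable: {entry['key_env']}")
    return {"model": entry["provider_model"], "api_key": api_key}


# Pass an explicit env mapping so the example runs without real secrets.
fake_env = {"EXAMPLE_PROVIDER_A_API_KEY": "sk-test"}
print(available_models())
print(resolve_model("llama2", env=fake_env))
```

Adding a provider by hand means touching all three pieces (registry entry, secret, alias), which is the overhead we wanted to remove.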

Since LiteLLM integrates with 100+ LLM APIs, we provide all of this out of the box with zero configuration. With a single environment variable, LITELLM_EMAIL, you can automatically add 100+ new LLM API integrations to your production server without modifying code or redeploying 👉 LiteLLM UI

Ask 👀:

- Adding new LLM providers? Contact us at krrish@berri.ai if you need help!

- ⭐️ us on GitHub to keep up with releases and news.

- Join our Discord!

Describe what your company does in 50 characters or less.

Clerkie is an AI tool that helps you debug code

What is your company going to make? Please describe your product and what it does or will do.

We believe that LLMs are good simulators of agents. With this paradigm in mind, one way to create value is to simulate the friend you reach out to when you’re stuck on a problem while programming. We’re therefore aiming to create value in ‘buddy problems’, i.e. the class of problems you hit while programming that require you to reach out to a friend or online community for help debugging.